Introduction

The Model Context Protocol (MCP) is a groundbreaking open standard designed to bridge the gap between AI models and the diverse tools and data sources they need to interact with. Created by Anthropic, MCP provides a universal interface that enables AI assistants to access real-time context from external systems securely and efficiently. This standard simplifies integration, enhances AI performance, and opens new possibilities for AI-driven applications.

What is MCP?

MCP is a protocol that allows AI models to connect to various tools and data sources in a standardized way. Think of it as a “USB-C port” for AI, a universal connector. Plug in an MCP server, and the AI model instantly gains access to the tools or data that server provides. Rather than building custom connectors for every new service, developers can use MCP as a universal adapter.

Key Components

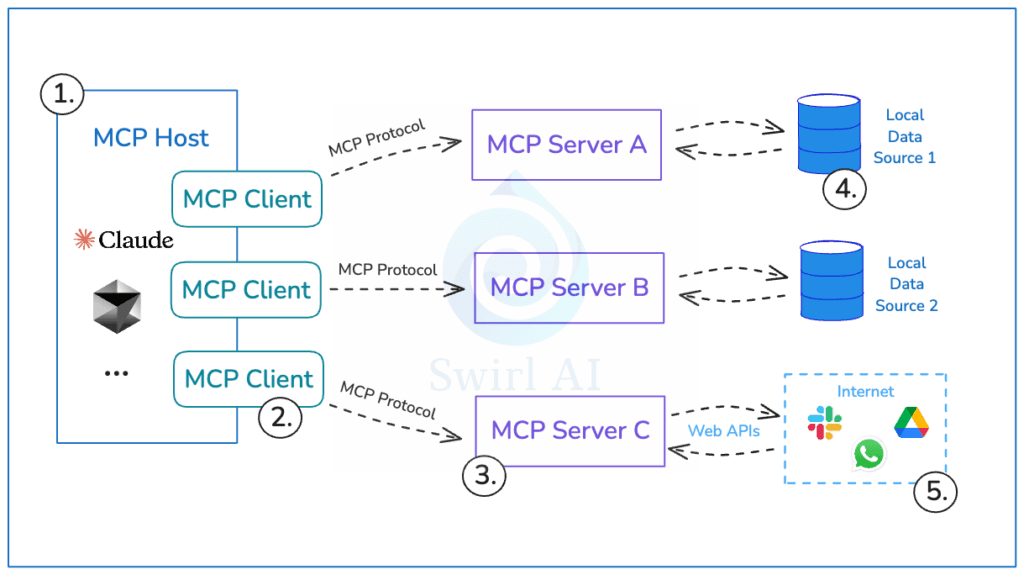

MCP Host

These are the front-end applications or interfaces (e.g. a chatbot UI, an IDE assistant, or Claude Desktop) that initiate connections and want to use external data or tools.

MCP Client

Running within the host, an MCP client maintains a 1:1 connection to an MCP server. It acts as a bridge, forwarding requests from the AI to the server and returning responses.

MCP Server

These are lightweight connector programs that expose specific capabilities (data or actions) through the standardized MCP interface. Each server usually connects to a particular data source or service (for example, a filesystem, database, Slack, or GitHub API) and “advertises” its capabilities (like what data or tools it can provide).

MCP Architecture

MCP uses a client-server model. Communication is based on the JSON-RPC 2.0 standard, ensuring structured and consistent interactions. When an MCP client connects to a server, the two perform a handshake to exchange protocol versions and capabilities, so that both sides know what the other supports. After initialization, the client can query the server for its available resources, tools, and prompts (these terms are explained below).
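As a rough sketch of the handshake described above, the exchange might look like the following JSON-RPC messages. The `initialize` method and the `protocolVersion`, `capabilities`, `clientInfo`, and `serverInfo` fields follow the published MCP specification; the client and server names, the list of supported versions, and the helper functions are assumptions made up for illustration.

```python
import json

# Protocol revisions this hypothetical server understands (newest first).
SERVER_SUPPORTED_VERSIONS = ["2025-03-26", "2024-11-05"]


def build_initialize_request(request_id, protocol_version):
    """Client side: the first message sent after the connection opens."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": protocol_version,
            "capabilities": {},  # what the client supports (nothing in this sketch)
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        },
    }


def handle_initialize(request):
    """Server side: agree on a version and advertise capabilities."""
    requested = request["params"]["protocolVersion"]
    # Echo the requested version if supported, otherwise offer our newest one.
    if requested in SERVER_SUPPORTED_VERSIONS:
        agreed = requested
    else:
        agreed = SERVER_SUPPORTED_VERSIONS[0]
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {
            "protocolVersion": agreed,
            "capabilities": {"tools": {}, "resources": {}, "prompts": {}},
            "serverInfo": {"name": "example-server", "version": "0.1.0"},
        },
    }


request = build_initialize_request(1, "2024-11-05")
response = handle_initialize(request)
print(json.dumps(response, indent=2))
```

In the real protocol the client would follow a successful response with a `notifications/initialized` notification before sending other requests.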

Communication is bidirectional: the client typically sends requests (e.g. “fetch this data” or “execute this action”) and the server returns results, but the protocol also supports one-way notifications and error responses. Because every message uses the JSON-RPC 2.0 format, calls and data are structured the same way across servers.
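To make the three message kinds concrete, here is a minimal sketch of JSON-RPC 2.0 requests, notifications, and error responses. The helper names are hypothetical; the `jsonrpc`, `id`, `method`, `params`, and `error` fields and the `-32601` code come from the JSON-RPC 2.0 specification, and `tools/list` and `notifications/initialized` are MCP method names.

```python
import json


def make_request(request_id, method, params=None):
    """A request carries an id, so the receiver must answer it."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method, params=None):
    """A notification has no id, so no response is expected."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_error(request_id, code, message):
    """An error response reuses the id of the request that failed."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "error": {"code": code, "message": message},
    }


def classify(message):
    """Tell the message kinds apart by which fields are present."""
    if "method" in message:
        return "request" if "id" in message else "notification"
    return "error" if "error" in message else "result"


examples = [
    make_request(1, "tools/list"),
    make_notification("notifications/initialized"),
    make_error(1, -32601, "Method not found"),
]
for msg in examples:
    print(classify(msg), json.dumps(msg))
```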

Servers can operate in two modes:

- Stdio: The server runs as a local subprocess, communicating via standard input/output streams (useful for local tools).

- HTTP + SSE: The server runs as a remote service, communicating over HTTP POST (for client requests) and Server-Sent Events (SSE) for streaming responses back to the client. (Newer revisions of the specification replace this transport with Streamable HTTP.)
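For the stdio mode, messages are exchanged as newline-delimited JSON. The sketch below imitates that framing with an in-memory stream standing in for the subprocess pipes; the function names are assumptions, and a real client would read from and write to the server process's stdout and stdin.

```python
import io
import json


def write_message(stream, message):
    # json.dumps escapes newlines inside strings, so each message stays on one line.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    # One JSON document per line; blank lines are skipped.
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


pipe = io.StringIO()  # stand-in for the subprocess pipe
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "method": "notifications/initialized"})
pipe.seek(0)
received = list(read_messages(pipe))
print(received)
```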

This flexibility means an AI assistant could connect to a local connector (like a file reader on your machine) or a cloud service connector over the network in the same consistent way. All MCP servers expose a common set of API calls (such as listing available tools or resources, invoking a tool, etc.), making them interoperable. In essence, an MCP server provides three types of things to an AI agent: resources, tools, and prompts.

MCP Capabilities

MCP servers expose three main capabilities:

1. Resources

These are read-only data inputs like files, documents, or database entries. They provide the AI with context but don’t allow modifications. Resources are typically application-controlled, meaning the AI or user must be granted access explicitly (e.g. a user chooses a file to share with the AI).
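A minimal sketch of how a server might back the `resources/list` and `resources/read` calls, assuming a small in-memory store. The URI, file name, and function names are made up for illustration; the shape of the returned dictionaries loosely follows the MCP specification.

```python
# Hypothetical in-memory store: URI -> resource metadata and content.
RESOURCES = {
    "file:///docs/readme.txt": {
        "name": "readme.txt",
        "mimeType": "text/plain",
        "text": "Hello from the README.",
    },
}


def list_resources():
    """Result for resources/list: metadata only, no content."""
    return {
        "resources": [
            {"uri": uri, "name": res["name"], "mimeType": res["mimeType"]}
            for uri, res in RESOURCES.items()
        ]
    }


def read_resource(uri):
    """Result for resources/read: the content of one resource."""
    res = RESOURCES.get(uri)
    if res is None:
        raise KeyError(f"Unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": res["mimeType"], "text": res["text"]}]}


print(list_resources())
print(read_resource("file:///docs/readme.txt"))
```

Note that there is no write path here: the store can be listed and read, which matches the read-only nature of resources.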

2. Tools

Actions or functions the model can invoke to affect external systems or retrieve information. These could be anything from querying an API or sending a message to performing a calculation. Tools are model-controlled (the AI can decide to call them when needed, usually with user approval in the loop). Each tool is described to the AI with a name, a description, and an input schema (like a function signature). The AI model can choose a tool and provide parameters, upon which the MCP server executes the underlying function and returns the result.
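The sketch below shows one way a server could describe a tool and dispatch a call to it. The `name`, `description`, and `inputSchema` fields and the `content`/`isError` result shape follow the MCP specification; the registry layout, the placeholder forecast function, and the simplified required-field check (a real server would validate against the full JSON Schema) are assumptions.

```python
def get_forecast(city):
    # Placeholder: a real tool would call a weather API here.
    return f"Forecast for {city}: sunny"


# Hypothetical registry: tool name -> descriptor plus handler.
TOOLS = {
    "get_forecast": {
        "description": "Get the weather forecast for a city",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": get_forecast,
    }
}


def list_tools():
    """Result for tools/list: what the model sees when choosing a tool."""
    return {
        "tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
    }


def call_tool(name, arguments):
    """Result for tools/call: run the handler and wrap its output."""
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    tool = TOOLS[name]
    missing = [k for k in tool["inputSchema"].get("required", []) if k not in arguments]
    if missing:
        text = f"Missing required arguments: {', '.join(missing)}"
        return {"content": [{"type": "text", "text": text}], "isError": True}
    text = tool["handler"](**arguments)
    return {"content": [{"type": "text", "text": text}], "isError": False}


print(list_tools())
print(call_tool("get_forecast", {"city": "Paris"}))
```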

3. Prompts

Predefined prompt templates or workflows that help the AI perform specialized tasks. These are user-controlled: the user (or developer) can select a prompt workflow for the AI to follow. For example, a prompt might guide the AI to follow a certain multi-step process (like an “onboarding Q&A workflow” or a code analysis routine). MCP servers can advertise available prompt templates, which the client can list and present as options.
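As an illustration, a prompt template could be stored with its declared arguments and rendered on request. The `analyze_code` prompt, its wording, and the function names are hypothetical; the `prompts/list` and `prompts/get` result shapes loosely follow the MCP specification.

```python
# Hypothetical prompt registry: name -> description, arguments, and template text.
PROMPTS = {
    "analyze_code": {
        "description": "Review a code snippet for bugs",
        "arguments": [
            {"name": "code", "description": "Source code to review", "required": True}
        ],
        "template": "Please review the following code and list any bugs:\n\n{code}",
    }
}


def list_prompts():
    """Result for prompts/list: templates the client can offer to the user."""
    return {
        "prompts": [
            {"name": name, "description": p["description"], "arguments": p["arguments"]}
            for name, p in PROMPTS.items()
        ]
    }


def get_prompt(name, arguments):
    """Result for prompts/get: the template filled in with the user's arguments."""
    prompt = PROMPTS[name]
    missing = [
        a["name"] for a in prompt["arguments"]
        if a["required"] and a["name"] not in arguments
    ]
    if missing:
        raise ValueError(f"Missing prompt arguments: {', '.join(missing)}")
    text = prompt["template"].format(**arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }


print(get_prompt("analyze_code", {"code": "print(1/0)"}))
```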

How It Works

- The MCP client connects to the server.

- They exchange supported versions and capabilities.

- The server advertises available resources, tools, and prompts.

- The AI model can now use these in its reasoning and tasks.

Overall, the architecture enables an AI system to maintain context and perform actions across various tools in a unified manner. For instance, once connected, an AI assistant can see a list of available functions (tools) and data sources (resources) from all connected MCP servers. When the AI needs some information or has to perform a task during a conversation, it can call the appropriate tool via the MCP client, which routes the request to the correct server. The server executes the request (e.g. queries a database or fetches a file) and returns the result, which the AI can then incorporate into its response. This design cleanly separates the AI’s reasoning (in the host) from the integration logic (in the servers), allowing AI models to extend their capabilities without hard-coding new APIs each time.
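The routing step can be sketched with a toy host that collects tools from several servers and forwards each call to the server that owns the tool. Everything here is a stand-in: `FakeServer` and `Host` are hypothetical classes, and a real host would hold one MCP client connection per server rather than calling Python objects directly.

```python
class FakeServer:
    """Stand-in for a connected MCP server."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # tool name -> callable

    def list_tools(self):
        return sorted(self._tools)

    def call_tool(self, tool_name, arguments):
        return self._tools[tool_name](**arguments)


class Host:
    """Aggregates tools from all connected servers and routes calls."""

    def __init__(self):
        self._routes = {}  # tool name -> server that provides it

    def connect(self, server):
        for tool_name in server.list_tools():
            if tool_name in self._routes:
                raise ValueError(f"Duplicate tool name: {tool_name}")
            self._routes[tool_name] = server

    def available_tools(self):
        return sorted(self._routes)

    def call(self, tool_name, arguments):
        server = self._routes[tool_name]  # pick the owning server
        return server.call_tool(tool_name, arguments)


host = Host()
host.connect(FakeServer("files", {"read_file": lambda path: f"<contents of {path}>"}))
host.connect(FakeServer("db", {"query": lambda sql: [("Ada",)]}))
print(host.available_tools())
print(host.call("read_file", {"path": "notes.txt"}))
```

Rejecting duplicate tool names is one possible design choice for this sketch; a host could also namespace tools by server name instead.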

Practical Use Cases

- Enterprise Knowledge Access: An AI assistant in a company can retrieve information from internal knowledge bases, document repositories, or wikis using MCP connectors. For example, an MCP server could connect to Google Drive or SharePoint to provide the AI with relevant documents when asked a question. Instead of training the model on confidential documents, the model can fetch up-to-date data securely on the fly.

- Communication and Productivity Tools: MCP allows integration with tools like Slack, email, or calendars. A chatbot could use an MCP Slack server to search through chat history or post messages, or use a calendar server to schedule meetings. These actions become standardized function calls (tools) that the AI can invoke (with permission) rather than custom plugins for each platform.

- Software Development and DevOps: Development assistants can use MCP to integrate with coding environments and workflows. For instance, an IDE plugin could connect to a Git/GitHub MCP server to let the AI read the codebase or recent commits for context. Similarly, an AI agent could use a logging or monitoring MCP server to fetch recent logs, or a ticketing system server to file or update bug reports. This enables AI-driven code analysis, debugging assistance, and task automation in software engineering.

- Database and API Queries: Instead of giving an LLM direct database access or a custom query interface, a developer can set up an MCP server for their database (e.g. Postgres) or an internal API. The AI can then ask for data through a safe, logged channel. For example, an AI customer support agent might query customer info from a database via MCP, or a data science assistant might fetch the latest metrics from an analytics API. The MCP server can ensure only read-only or scoped queries are allowed as configured (preventing unauthorized access or modifications).

- Real-Time Data and External APIs: AI applications often need current information not in their training data. MCP servers can act as bridges to external APIs for weather, stock prices, maps, etc. A weather MCP server (as in a common demo) could expose a `get_forecast` tool that calls a weather API. An AI travel assistant could use an airline MCP server to check flight statuses. All these become standardized tools the AI can use, improving the assistant’s usefulness by supplementing its static knowledge with live data.

- Performing Actions or Transactions: Beyond just reading data, MCP tools allow AI to perform write actions in a controlled way. For example, an AI integrated in a CRM system might have an MCP tool to `createNewTicket` or `updateDatabaseEntry`: actions that, when invoked, carry out real operations via the MCP server’s logic. Because MCP supports granular permissioning and scoping, such actions can be constrained (e.g. only allow writing to a test environment, or require user confirmation). This opens the door to AI agents that can not only answer questions but also execute tasks (like drafting an email, logging a support ticket, or running a script) in a governed manner.
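The database use case above mentions restricting a server to read-only queries. One way to sketch that idea, assuming a SQLite backend rather than the Postgres example in the text, is to switch the connection into query-only mode before any model-supplied SQL runs. The table, the sample row, and the `run_query` helper are made up for illustration.

```python
import sqlite3

# Set up a small in-memory database (stand-in for a real customer database).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO customers (name) VALUES ('Ada')")
conn.commit()

# From here on, SQLite rejects any statement that would modify the database.
conn.execute("PRAGMA query_only = ON")


def run_query(sql, params=()):
    """Hypothetical tool handler: run SQL and report failures instead of raising."""
    try:
        rows = conn.execute(sql, params).fetchall()
        return {"ok": True, "rows": rows}
    except sqlite3.Error as exc:
        return {"ok": False, "error": str(exc)}


print(run_query("SELECT name FROM customers"))
print(run_query("INSERT INTO customers (name) VALUES ('Bob')"))
```

Enforcing the restriction at the database connection, rather than by inspecting the SQL text, means the guard does not depend on parsing whatever query the model produces.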

Developer Experience

SDKs and Languages

MCP SDKs are available in TypeScript, Python, Java, Kotlin, and C#. These SDKs handle the low-level communication and make it easy to implement an MCP server or client.

Server Deployment

You can run a server locally or host it remotely. For example:

`npx @modelcontextprotocol/server-filesystem`

This launches a local server that gives the AI access to your file system.

Integration with AI Platforms

MCP is supported by:

- Claude AI (Anthropic)

- OpenAI Agents SDK

- LangChain

- Chainlit

- Pydantic-AI

Adoption

Companies and tools using MCP include:

- Replit

- Zed IDE

- Block (Square)

- GitHub

- Slack

These companies use MCP to make their AI tools smarter, more context-aware, and better integrated into workflows.

Benefits of MCP

- Standardization: One protocol for many integrations.

- Security: Controlled access to tools and data.

- Scalability: Reuse connectors across apps.

- Extensibility: Plug in new capabilities easily.

Conclusion

MCP is revolutionizing how AI models interact with the world. By offering a standardized, secure, and extensible way to connect tools and data sources, it transforms isolated AI models into powerful, context-aware agents. As adoption grows, MCP is poised to become a foundational layer for AI-powered systems across industries.

Learn more at: https://modelcontextprotocol.io